System Identification¶

Preface¶

I started to think about system identification when I arrived at Delft and the discussions began with Arend and Jodi. I hadn’t much clue about the formal field and theory of system identification. My first look at it was when Arend presented me with a light introduction by Ljung, so I credit Arend and his cohorts for the ideas that have been implemented in this chapter. We talked about it here and there but never really put together a solid experimental plan to do anything about it. Karl Åström visited us in Delft in late 2008 and we talked some with him about system identification of the bicycle. I mainly recall him focusing on how to excite the system in a very controlled manner with an oscillating mass on the bicycle. Some of these ideas propagated through Arend to Peter and can be seen in the final sections of his thesis [DL11]. Many of these ideas influenced our NSF proposal and ultimately the final portion of what I was to do for my dissertation work.

Once I had gotten back to Davis we now had the resources available from NSF funding to make something happen. My goal had basically formalized into creating a instrumented bicycle to be controlled by a person that was capable of measuring all of the kinematics and kinetics involved in regulated control tasks. We also originally had hoped to be able to vary the dynamics of the bicycle, but the reduced funding nixed that idea. It took some time into the project to really understand what we might be able to accomplish with that but it finally materialized into validating Ron’s theoretic control model which is discussed in [HMH12] and Chapter Control with data collected via the instrumented bicycle. After our first experiments, over a year into the project timeline it became apparent that simple tasks with measured lateral perturbations would provide the best chance of validating his model. Unfortunately, I had not thought a great deal about how to provide and measure these lateral perturbations but the manually excited perturbations seemed to do the trick.

We ran a lot of preliminary system identification analyses on the first set of trial data, but it quickly became apparent that we had little understanding of the subject. The early analyses did give confidence that the data was of good enough quality to do something with, but our goal of identifying the parameters of the controller were still far from our reach.

We performed the final set of experiments around August and September of 2011 to get a large sample of data for the final analyses. The NSF grant was to end at the end of September and I still had to figure out how to analyze all of the data, not to mention write up a ton of work for my dissertation. We ended up extending the NSF grant another year (as seems to be typical with these things). I look back to our original proposal and in hindsight the scope was way too large (accurately predicted by Arend). We nixed the handling qualities parts when the funding was lowered, but I now see that what we hoped to do really took another 6-12 months longer than we had intended.

The final analyses have forced me to figure out what system identification is all about and I’ve learned a great deal rapidly and much on my own. At this stage we weren’t able to find any local experts on the subject to help us along but I’ve gotten some great insight from both the single track vehicle dynamics email list and from personal communication with Karl Åström. I still feel very weak in the subject but it is more clear how difficult identifying complex systems is, especially trying to nail down physical parameters.

As many doctoral students probably hope when starting their long trek to the PhD, I hoped for some grand findings to arise from this work. But I’ve been humbled a lot in that quest. I present here the work I’ve done with regards to identifying the bicycle and rider system with what I think are good results, but I hope that it can be a guide for others to see some of the difficulties in executing this kind of analysis with some ideas to better structure it.

Introduction¶

The work presented in this Chapter is intended to be the icing on the cake that takes into account all of the theory presented in the previous chapters and evaluates it with a large set of data taken with the instrumented bicycle described in Chapter Davis Instrumented Bicycle. It is also the overarching deliverable I was responsible for under the NSF grant. This chapter details system identification of a bicycle and rider system. It is broken in two main parts, the first goal being to identify the passive bicycle rider system and the second to identify the active portion, i.e. the rider’s control system. The literature review gives an introduction to other’s efforts in both these analyses with respect to single track vehicles.

Two important concepts need to be mentioned briefly before proceeding. The first is a review of the terms used to categorize model structures in the system identification field: white box, black box, and grey box. System identification is the process of identifying a model that best predicts a particular input/output relationship. If we develop a model entirely from first principles[1], e.g. using Newton’s laws to describe the motion of an object, when all of the parameters and states are known then this is called a white box model because we know everything about it a priori. The second case, the black box model, describes a model in which one only knows the structure, i.e. order, of the underlying equations but all the coefficients are unknown. Finally, the grey box model falls in between the first two in that one may have more knowledge of the structure of the coefficients based on first principles, e.g. one may know that some model coefficients are zero or that the model may be parameterized by some known and some unknown physical characteristics.

The second concept regards the notion of process and measurement noise in a dynamic system. Dynamic systems have states that evolve dynamically through time, but a real system has elements that are not purely deterministic which are typically called noise. The noise can be broken up into the process noise, the noise due to errors in, or random inputs into, the model, and the measurement noise, the noise due to errors in the measurements. The ability to characterize these two types of noise plays a large role in the accurate identification of dynamic systems.

Literature¶

There is a rich history of bicycle and motorcycle mathematical model development. This has been able to explain many of the more dynamically fascinating phenomena, from countersteering and stability to speedman’s wobble and gyroscopic effects. But the amount of experimental validation of these idealized models pales in comparison, with the motorcycle experimentation outdistancing that done with bicycles.

Basic bicycle and motorcycle identification is typically done on data collected by exciting the vehicle through force/torque perturbations in either roll or steer. These experiments can be done when the bicycle is under closed loop control or when the bicycle is stable, the former being a requirement for speeds outside of the stable speed range. But, the mode excitation methods are limited to the frequency band around that mode of motion. Manual excitation under closed loop control gives better excitation bandwidth and a pairing with modern system identification techniques can provide richer models.

Passive Vehicle and Rider Identification¶

Identification of passive, open loop vehicle-rider models is more prevalent than the identification of the controller. It is indeed a requirement to have a very good vehicle model before attempting to identify the control system a human employs while controlling the system. The bicycle and motorcycle are excellent choices for manual control experiment design due to the fact that they are relatively economical systems that require a broad range of human control skills to stabilize and direct the vehicle, but they have the disadvantage that first principles models are somewhat more difficult to develop and have smaller pool of prior research as say cars or aircraft. The approaches to identifying the passive model include mode excitation techniques to system identification under more general inputs.

CALSPAN¶

The earliest comprehensive bicycle model validation began at CALSPAN in the late 60’s. This included several revolutionary studies, in one of which they made use of a rocket to apply know step torques to an uncontrolled riderless bicycle. In another, simulations of slalom maneuvers were visually compared with video footage [RM71].

Eaton¶

David Eaton’s work ([Eat73b], [Eat73a], [ES73]) may be the closest example to the work presented in this chapter. He did his PhD work at the University of Michigan under the Highway Safety Research Institute. His dissertation focused on the experimental validation of the motorcycle modeling work of [Sha71] and the human controller modeling work of [Wei72]. He did this with two sets of experiments: 1) identification of the uncontrolled dynamics of the motorcycle under perturbations, and 2) identification of the rider controller during roll stabilization tasks. The latter of which will be discussed in the next section.

His initial experiments were aimed at validating and identifying the passive motorcycle system. During these experiments, his subjects road a motorcycle with their bodies rigidly braced to the frame and hands-free at speeds of 15, 30, and 45 mph (6.7, 13.4, and 20.1 m/s) along side a pace car which recorded the output from roll angle, roll rate, and steer angle sensors. The brace and open loop response allowed rigid rider modeling assumptions to be used. Weights were dropped from one side of the motorcycle to induce a step roll torque and the rider used a single pulse in steering torque to the handlebars to right the motorcycle in roll after the drop. These experiments were impressively dangerous and would be hard pressed for approval by the Institutional Review Board if done today, but well designed for the typical modeling assumptions. The resulting time histories of the measured system outputs were compared to simulations of Sharp’s model [Sha71] augmented with a variety of tire models of Eaton’s design. He found good agreement between the experiments and the models for higher speeds, but felt that a more complex tire model was needed to predict the wobble mode in slower speed runs.

The second set of experiments were more tame. The three riders simply balanced the motorcycle on a straight path at two speeds, 15 mph and 30 mph, for a total of 38 runs. He added a steer torque transducer bar above the handlebars. The rider controlled the motorcycle with one hand and the rider applied torque was recorded along with the other signals. No perturbations were necessary, as the rider’s natural control actions excited the system in a wide enough bandwidth. From this data he was able to identify the motorcycle steer torque to roll angle transfer function through the spectral densities of the measured signals (by dividing the cross spectrum of the roll angle and steer torque signal by the power spectrum of the steer torque). The identified transfer functions show good agreement with the augmented Sharp motorcycle model at the 30 mph speeds, but less so for the 15 mph runs.

His generated frequency responses from the second experiments provided an empirical model, while the simulation comparisons from the first experiments were validation rather than identification.

Weir, Zellner, Teper¶

Weir, Zellner, and Teper performed an extensive experimental study on motorcycle handling qualities for the U.S. National Highway Traffic Safety Administration in the late 70’s, [WZT79]. This was a follow up to both the CALSPAN studies and [Tag75], both under or related to the same Administration. There is little to no explicit system identification in the study but some important elements are there. In terms of the passive model identification, they present steady state comparisons of their experimental data to their models with varying degrees of qualitative agreement and generally good ability to predict the conditions at which sign reversals in torque are needed to maintain a steady turn. They also compare single lane change simulations of a controlled vehicle to their measured data by visual inspection. They unfortunately admit that adjusting the first principles models to better fit their measured data was outside the scope of the project. But this gives some early examples of model evaluation with respect to good quality data.

James¶

Stephen James published a study in 2002 [Jam02] in which he attempted to identify the linear dynamics of an off-road motorcycle. He measured steering torque, steer angle, speed, roll rate, and yaw rate with his subjects manually exciting the vehicle through steer torque during runs at various speeds on a straight single lane road. He made use of black box ARX SIMO identification routines of 6th and 7th order (his and others motorcycles models are usually 10th+ order) to tease out the weave and wobble eigenvalues. He compares the identified eigenvalues, eigenvectors and frequency responses to his motorcycle model and claims good fits based on visual interpretation of the plots. The agreement is questionable due to the lack of statistics in the model comparisons and little validation of his first principles model which assumes a rigid rider. The study does show that there is the possibility of identification of multiple modes of motion with simple manual excitation of the handlebars. He also used these techniques to identify the same motorbike with a single wheel trailer in [Jam05].

Biral et al.¶

[BBCL03] performed a nice study to identify motorcycle dynamics under an oscillatory steer torque input. They measured steer torque, roll rate, steer angle, and yaw rate with an instrumented motorcycle. They performed slalom maneuvers at speeds from 2 to 30 m/s at three sets of cone spacings in the slalom course. The resulting time histories were close to ideal sinusoids. They used curve fitting to find amplitude and phase relationships among the measured signals. The results were plotted on Bode plots for comparison to the frequency response of several first principles models. The models predict the experimental data and their motorcycle model is shown to do a better job than other models from literature. This claim is only based on visual inspection. I would say this technique and others like it are more of an ad hoc method of system identification of the vehicle dynamics because they rely heavily on very specific input and output characteristics, but nevertheless seems to be effective. Making use of formal system identification techniques could potentially give more reliable results and the ability to better characterize the uncertainty in the predictions.

Kooijman¶

Jodi Kooijman has worked on experimental validation of the benchmark bicycle [MPRS07] linear equations of motion for a riderless bicycle [Koo06], [KSM08], [KS09]. His instrumented bicycle measured the steer angle, forward speed, roll rate, and yaw rate. Due to the fact that the bicycle can be stable at certain speeds he was able to launch the bicycle in and around the stable speed range and perturb the bicycle with a lateral unmeasured impulse and record the stable decay in the steer, roll, and yaw rates. The post perturbation time histories of the measured signals provided nice decaying oscillations and curves could be fit to find both the time decay constant and frequency of oscillation. These were then compared to the predicted weave response based on the first principle model numerically populated with measured physical parameters of the bicycle. He found good prediction abilities of the weave mode between 4 and 6 m/s. The “goodness” of fit were gaged by visual inspection with no uncertainty estimates in the models or the results from the dynamic measurements. The method was not able to predict the heavily damped caster mode nor the capsize mode. He also demonstrated that the measured dynamics were the same when the experiments were performed on a treadmill.

In [KMP+11], Jodi constructed a bicycle with very unusual physical characteristics including negative trail and canceled angular momentum of the wheels. He performed similar experiments to his Master’s thesis work. They show the comparison of a single stable experiment in which yaw and roll rates were measured and compared to the predictions of the benchmark bicycle.

[Ste09] and [ER11] both perform experiments similar to Kooijman’s with similar results, although Steven’s results vary in the ability of the model to predict the data for various configurations of his adjustable bicycle.

These also fall into the ad hoc system identification techniques that take advantage of the stability at certain speeds and very specific output characteristics. The variability in reproducibility in the studies from other researchers should be noted.

Chen and Doa¶

[CD10] develop a first principles non-linear bicycle model with a fuzzy controller and use it to generate stable simulations for various speeds. They then do an output error grey box identification on the resulting data with respect to the non-zero and non-unity entries of the state, input and output matrices (i.e. just the entries of the acceleration equations). The identification is done for a discrete number of speeds in the range 1 to 15 m/s. The eigenvalues are calculated of the resulting identified, speed-dependent A matrices and the root locus plotted versus speed.

The resulting eigenvalues seem to behave like the benchmark bicycle but the capsize mode is shown to go unstable briefly at a speed lower than the stable speed range. They did not attempt to characterize or identify the process noise even though they generated the data with a known model with known input noise. Also their non-linear bicycle equations of motion [CD06] were never validated against any other accepted models. Both of these could potentially explain the discrepancies in their identification. Their identification procedure does show that it may be possible to get good estimates of a linear model of the vehicle alone from noisy data regardless of the controller which stabilizes the vehicle.

Doria¶

In [DFT12] experiments are performed where a motorcycle rider excites the steering with a pulse and lets the motorcycle oscillate while the rider keeps his hands on the handlebars (as opposed to Eaton’s hands-free experiments). The resulting dynamical measurements are nice decaying sinusoidal-like motions and the authors fit optimal curves to the data. They identify the time constants, and frequency and phase information to construct the eigenvalues and eigenvectors of the excited mode. The empirically derived eigenvectors show some resemblance to the model’s predictions.

Controller Identification¶

van Lunteren and Stassen¶

At Delft University of Technology in the Man-Machines research group, Drs. van Lunteren and Stassen began work in 1962 to identify the human controller for a normal population of subjects and report on their work into the early 70’s ([VLS67], [VLS69], [Sta69], [VLS70a], [VLS70b], [VLS70c], [VLS73], [SVLB+73]). They chose a bicycle simulator as the plant because it was a common task that average people could do and their studies could focus on a wider population of individuals as compared to most previous work based around trained pilots. The bicycle simulator did not capture all of the essential dynamics of a real bicycle as it’s operation was based on only the simplified roll dynamics of Whipple’s model, but nonetheless offered a similarly complex roll stabilization control task as a normal bicycle would. The simulator was controlled by both the steering angle and the rider’s lean angle, both of which are questionable inputs as have been pointed out as early as [RL72].

They assumed the rider’s control actions can be described by a PID controller with time delays on each feedback variable and mention that this controller was chosen instead of a McRuer style controller primarily due to limitations of their computational equipment. The error in the roll angle is fed into two PID controllers each with a time delay: one to output the corrective steer angle and the other to output the corrective lean angle. They introduce a remnant term for each control action and the external disturbances to the bicycle model.

The identification goal was to find the six gains and two time delays in which the controller performed as a human would. The preferred method was a real time estimation routine due to the speed of computations and reasonable agreement their correlation method. The results indicated that the subjects used no integral control (i.e. only position and rate feedback). They could identify within a bandwidth of about 2 Hz and noticed that when the system was undisturbed there was a 0.5 Hz dominant frequency in the rider’s control actions. The rate feedback was more dominant in generating the lean control input than it was for the steer control input. Also, they found the time delay for lean to be larger than the steer time delay and postulate that the steer action is a result of cerebral activity while the lean is more of a reflexive pattern. Another finding resulting from analysis of Nyquist plots of different riders’ identified control actions showed that riders chose different control actions. They attribute this to the roll stabilization being a sub-critical task (i.e. a more difficult task may force different riders to adopt similar control behavior). They also investigated the effects of drugs, such as alcohol, on the riders control behavior. They found correlations from drug dose to time delays and the error in the control actions. Their later studies introduced better identification methods and they found discrepancies in the identified time delays of the later work as compared to the previous work. For example, the steer control time delay was originally found to be around 1.5 seconds and the improved methods found the delay to be around 0.7 seconds. The discrepancy was attributed to the bias due to remnant in their early work. They also introduced a visual tracking task into the simulator but had difficulties in getting reliable transfer function identification as compared to the roll stabilization transfer functions which improved in quality due to longer trials of 35 minutes.

The methods developed in their studies are excellent and thorough examples of early parameter identification in human control tasks. The simpler plant dynamics were most likely beneficial in reducing the uncertainty in the identified parameters, but the choice of angles as inputs instead forces of torques may not be a realistic enough model of the human’s actuation.

Eaton¶

After feeling confident in his motorcycle identification results, Eaton made use of the Wingrove-Edwards ([WE68], [WEC69], [Win71], [Edw72]) method in tandem with an impulse identification to identify the human controller. The remnant element was large with respect to the torque that was linearly correlated with the roll angle, but the human control element was identified with a simple gain and time delay for most of the high speed runs. The time delay identification of about 0.3 seconds was very repeatable across all runs. Furthermore, he demonstrated that the crossover model was evident in the resulting closed loop rider-motorcycle transfer functions.

Eaton is one of very few who have identified the rider controller during actual single track vehicle tests with confidence in the underlying passive rider-vehicle model. This study has influenced the work in this chapter in many ways.

Doyle¶

A recently uncovered study on the manual control of a bicycle from a psychologist’s perspective has some very non-traditional techniques and outlooks for the understanding of the control system employed while balancing a bicycle [Doy87]. Anthony Doyle’s paper [Doy88] on his thesis topic opens with “The old saw says that once learned it is never forgotten, but what exactly is learned has been by no means clear.” This reflection points to the great complexity behind balancing a bicycle, such an easily gained skill. He chooses to study the bicycle over a simpler task partially due to the fact that the rider has little freedom in effective control strategies and partially because it is a skill many people can do.

His goal was to determine how much of the rider’s control actions can be accounted for without involving higher cerebral functions. He mentions the Weir and Zellner work and the fact that its focus is on motorcycles at high speed, and questions whether the control employed for their system is simply a different version of the one employed on a bicycle at low speed or whether they are different control methodologies altogether.

He was aware of the inherent stability that bicycles can provide and constructs an instrumented bicycle where the head angle, trail, and front wheel gyro effects are eliminated so that “all steer movements are a result of the human’s control”. He also mentions, but doesn’t use, a body brace to eliminate unnecessary body movements and he blindfolds his subjects so that their sensory information is limited to proprioception and vestibular cues. He mentions the arm and upper body movements and how it is difficult to tease out the deliberate movements versus the passive dynamics of the body. With the instrumented bicycle he conducts low speed steady turn and balancing tasks and measures speed, roll rate, and steer angle.

Along with the experimental data, he developed a bicycle and rider model with accompanying controller. The derivation of the bicycle model is questionable due to the non-traditional methods, but he does end up with a model which behaves like a bicycle including speed dependent stability. He is aware of the need to roll the bicycle frame in the direction of the desired turn for directional control and how counter-steering plays a roll in this. This concept leads to the primary inner loop being chosen as roll control and his control structure resembles that of Weir’s work in terms of sequential loops. He cites the crossover model and is aware that humans can adjust their gains as needed for good performance. The controller is traditional in most senses and follows the patterns by McRuer, Weir, and Eaton, but he adds in the ability to add discrete pulses to the roll angle. He feeds back roll acceleration and integrates it to get roll angular velocity. This is basically a continuous PD control on roll rate. But his non-continuous addition to the controller is based on a fuzzy logic-like rule “Make a pulse against the lean whenever it gets bigger that 1.6 degrees.”

It seems like he gets somewhat close matches to the experimental traces from his control model simulations without the discrete pulses, but then adds in pulses (single or multiple) to the steering so that the traces matches more closely. His identification technique and criterion is focused around a detailed examination of the patterns in the time histories in a very qualitative way.

His results focus on the evidence for intermittent control and finds the traditional gains to be inversely proportional to speed. He claims the balancing part of the control system is done primarily in the lower cortex.

To me, Doyle’s work emphasizes the need for close collaboration between psychologists and control engineers to formalize the theory for human balance. His intermittent control theory may be valid, but due to the unusual model development, simulation and analysis techniques it is hard to gauge whether the need for intermittent control was simply an artifact of poor vehicle modeling. His insight into the human control theory is very enlightening and his ways of wording bring the theory outside the traditional control framework for an expansion in understanding.

Lange¶

Peter de Lange’s recent Master thesis work [DL11] focused on identifying the rider controller from the data that he helped us collect while interning at our lab. He used the Whipple bicycle model, a simplified second order representation of the human’s neuromuscular dynamics (natural frequency 2.17 rad/s and damping ratio of 1.414[2]) and a PID like controller with a 0.03 second time delay. The controller structure had gains proportional to the integral of the angle, the angle, the angular rate and the angular acceleration for roll and steer. The control task was defined as simple roll stabilization (i.e. track a roll angle of zero degrees), even though the data was collected during heading and roll tracking tasks.

He used a four step process for identifying the rider controller 1) he “removed” the human remnant by averaging the time histories over several individual perturbations, 2) he identified a high order finite impulse response model (only a function of previous inputs) for the lateral force to steer angle SISO pair (lateral perturbation force input and steer angle as output) 3) low pass filtered the resulting responses, and 4) he identified the rider controller parameters with a grey box model using the filtered FIR simulation results as the base data. The grey box model was parameterized with eight gains and a time delay. He was able to identify the gains, but the time delay always gave a resulting unstable model, so he dropped it. Furthermore, all of the gains were not necessary for good model predictions so he eliminated the unnecessary gains systematically to find the critical feedback elements. These turned out to be the gains for roll angle, roll rate, steer rate, and the integral of the steer angle. The first three are as one may expect and he concludes that the steer angle integral could be equated to yaw angle feedback since they are proportional in the linear sense.

Peter’s approach hinges on the averaging process in step one. The human remnant is large relative to the measurements and averaging potentially removes data that isn’t necessarily noise. This averaging is atypical, as process noise models are usually employed to account for these variations in the data. Using a model such as ARMAX instead of the two step averaging and FIR model would potentially allow one to identify the underlying linear model without removing potentially valid data in the time history averaging process. Or all of the steps could be combined into a state space grey box formulation with a process noise model, for a more direct route to identifying the free parameters. But these methods have their difficulties and will be described later in the Chapter.

Conclusion¶

The literature provides many examples of first principle models for both the open loop vehicle-rider system dynamics and the rider’s control, but often proving that those models are good predictors of real physical phenomena is difficult. The previous examples presented above have various similarities that influence the methods I’ve chosen to use to identify the vehicle and the rider.

- Open loop identification

The purpose of the open loop identification is to identify the passive vehicle and rider dynamics. This includes the force and kinematic relationships of the bicycle or motorcycle and, if a rider is present, the passive dynamics of the rider’s body motion. Their are two basic approaches that have been used in literature.

- Mode Excitation

- This involves identifying particular modes of motion by forcing the system such that those modes are excited. The input to the vehicle is typically limited to a narrow bandwidth. The forcing can be generated manually from human control, by external perturbations, or as a result of the maneuver. The techniques are best at identifying sustained oscillatory modes. Decaying oscillations are fit to the data to extract time constant, frequency, and phase shift for various input-output combinations. These techniques generally give good repeatable results, but are limited to identifying single modes and require many experiments to get a spread in frequency content and vehicle speed. These methods are also limited to identifying open loop dynamics.

- Excitation

- Many modes of a model can be simultaneously excited if proper inputs to the vehicle are chosen, giving the opportunity to identify more complete dynamic models. Frequency sweeps, white noise, and sum of sines are good candidates for a broad input spectrum. And it turns out that the remnant associated with human control and or deliberate random manual excitation can provide a wide bandwidth input spectrum as shown in [Eat73b] and [Jam02] for adequate system identification of many modes including the higher frequency wobble mode. Modern system identification techniques can be used to find models and identify physical parameters.

- Rider Control Identification (closed loop, active)

Few have attempted to identify the rider as a control element in the bicycle or motorcycle system. The large array of potential control actions from a unconstrained rider is extremely difficult to measure, especially when both the forces and kinematics are keys to proper identification. Typically limits are put on how the rider can actuate the system and in some cases limits are put on the rider’s ability to sense the system. This is somewhat critical so that the system is much more tractable. Similar to the open loop excitation techniques, a broad frequency spectrum provides better data to work for identification purposes. [DL11] has a good overview of excitation ideas.

The open loop dynamics are in some sense much easier to model with first principles, as the theory is much more mature. On the other hand, the theoretical constructs of the control system of the human is relatively in its infancy, so having the advantage of strongly validated first principles is much weaker than say in the field of mechanics. Most researchers’ approaches have been modeled from the manual control work lead by authors such as Tustin and McRuer in the 50’s and 60’s. When mapped to the bicycle, the primary control loop is taken as roll stabilization and roll command authority, with the secondary loops being heading and tracking. Both sequential loop controller designs and the popular PID controllers have been used as a structure for gain and delay parameter identification in the control loops.

Accurate parameter identification relies on accurate characterization of the system process noise and in the case of a human rider, the process noise is often comparable in magnitude and frequency to the control actions themselves. Techniques that treat the controller as a quasi-linear structure, in which the noise is modeled as white and Gaussian and characterized by the portion of the output not linearly correlated to the input (i.e. remnant), have been popular in the past. [Eat73b] took care to account for this and found that the crossover model was a good predictor of human control action. A proper treatment of the noise by other researchers is typically little to none and justly so as it is not necessarily easy to deal with small signal-to-noise ratios in the linear control framework. Modern system identification techniques offer some ability to model process noise with ARMAX types of implementations and state space formulations benefiting from the integration with Kalman filters. As will be discussed in the following sections, model identification works fairly well but parameter identification such as those for control gains becomes increasingly difficult with larger noise.

Experimental Design¶

Our main experimental designs were focused around reasonable ways to excite the rider/bicycle system with the goal of identifying the parameters of the rider control system. I started by simply repeating some of the perturbation experiments from Chapters Delft Instrumented Bicycle and Motion Capture, but included and measured the lateral perturbation force and the steer torque which were critical measurements for realistic input/output relationships that the previous studies lacked. We also attempted single lane change maneuvers because we’d been using a lane change as our objective criterion in our simulations [HMH12] and they had been used successfully used in the literature. It turned out that we were able to get reasonable results with preliminary system identification with the lateral perturbation runs and did not pursue the lane change maneuvers beyond the preliminary efforts. The lane changes were especially difficult on the narrow treadmill.

Riders¶

We chose three riders: Charlie, Jason, and Luke of similar age: 34, 28-29, 32, mass: 79, 84, 84 kg and bicycling ability although Luke has more technical mountain biking skill than the other two riders. A wide range of skill levels were outside the scope of the project and we preferred riders with good proficiency as it has been shown that it increases repeatability of results in tasks such as these [WZT79]. The seat height and harness were set in the same position for Charlie and Luke and in a different position for Jason. The inertia of the rear frame was measured for both configurations (thus the “Rigidcl” and “Rigid” bicycles) in Chapter Physical Parameters.

Environments¶

We performed the experiments in two different environments: on a treadmill and in a large gymnasium.

Treadmill¶

Dr. James Jones at the veterinary school at here at Davis graciously let us use their horse treadmill (Graber Ag Kagra Mustang 2200) during their downtime, Figure 11.1. The treadmill is 1 meter wider and 5 meters long and has a speed range from 0.5 m/s to 17 m/s. This was only a third of the width treadmill at Vrije Universiteit in Amsterdam, but after some practice runs we felt that narrow lane changes and the lateral perturbations could be successfully performed. We used the treadmill because the environment was very controllable, in particular with regard to fixed constant speeds, and it offered the ability to do very long run durations within a broad speed range. Potentially both the side railings and the belt side curbs added to rider’s lack of lateral movement space and changed the riders’ control strategy.

Pavilion¶

The bicycle was designed in such a way that all of the data collection equipment was on board and was suitable for data collection in a free environment. After lengthy bureaucratic negotiations, we were able to make use of the UCD pavilion floor for the experiments, Figure 11.2. The floor was made of a stiff rubber[3] and provided a rectangular, wind-free space of about 100’ by 180’ (30 m by 55 m). We rode around the perimeter to build up speed and did our maneuvers on a straight section about 100 feet (30 m) long. We were not able to travel at speeds higher than about 7 m/s as the tires would slip in the final turn into the test section (this seemed to be due to the dust on the floor). This indoor environment provided a wind free area which was more akin to the environment bicyclists normally ride in than did the treadmill.

Maneuvers¶

Our choice of maneuvers was primarily guided by our previous experiments and the search for an optimal way to externally excite the system. We also made sure to perform sets of experiments that would act as a control without deliberate disturbances. The following list details the meaning of the maneuver labels in the dataset.

- System Test

- This is a generic label for data collected during various system tests that should not be used for general analysis. This was primarily used to check that all sensors were working before each set of experiments.

- Balance

- The rider is instructed to simply balance the bicycle and keep a relatively constant heading. They were instructed to focus on a point of their choosing in the far distance. There was an open door in front of the treadmill which allowed the rider to look to a point outside across the street. In the pavilion, the rider looked into the rafters of the building or at the furthest wall. We may have given slightly different instructions to the riders and Charlie did not understand the instructions exactly during some of the earlier runs, but nonetheless these can be analyzed with a control model that only has the roll and heading loops closed and maybe even with only the roll loop closed. We had a line taped to the pavilion floor during these runs that was still in the periphery of the rider’s vision. This may have affected their heading control even though they were instructed to ignore it.

- Balance With Disturbance

- Same as ‘Balance’ except that a lateral force perturbation is applied just under the seat of the bicycle. The rider wore a face shield on the side of the perturber so no visual cues were available to predict the perturbation time or direction. On the treadmill, we sample for 60 to 90 seconds with five to eleven perturbations per run. On the pavilion floor we were able to apply only two to four perturbations per run due to the length of the track. In the early runs (< 204), the lateral force was applied only in the negative direction (to the left) and the perturber decided when to apply the perturbations. For the later runs (> 203), we applied a random sequence of positive and negative perturbations that was unknown to the rider. On the treadmill, the rider signaled when they felt stable and the perturbation was applied at a random time between 0 and 1 second based on a simple computer program. On the pavilion floor, we simply applied the perturbations randomly but as soon as the rider felt stable so that we could get in as many as possible during each run.

- Track Straight Line

- The rider was instructed to focus on a straight line that was marked on the ground and he attempted to keep the front wheel on the line. The line of sight from the rider’s eyes to the line on the ground was essentially tangent the top of the front wheel. In the pavilion, the line could be seen up to 100 feet ahead, so there was greater peripheral view of the line. On the treadmill, there was from 0.5 to 1.5 meters of preview line available.

- Track Straight Line With Disturbance

- Same as “Track Straight Line” except that a lateral perturbation force is applied to the seat of the bicycle. This was done in the same fashion as described in “Balance With Disturbance”.

- Lane Change

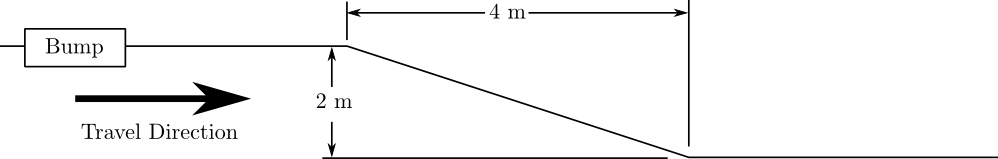

- The rider attempted to track a line in similar fashion as the “Track Straight Line” maneuver except that the line was a single lane change. On the pavilion floor, the line was taped on the ground and the rider was instructed to do whatever felt best to stay on the line Figure 11.3. They could use full preview looking ahead, focus on the front wheel and line, or a combination of both. We also tried some lane changes on the treadmill but the lack of preview of the line made it especially difficult. We were able to manage it by marking a count down on the belt so that the rider new when the lane change would arrive. The rider also knew the direction of lane change beforehand for all the scenarios.

- Blind With Disturbance

- We did a run or two for each rider on the pavilion floor with the rider’s eyes closed to attempt to completely open the heading loop. In hindsight, blind tests would be preferable when identifying the rider control system so that only inner roll stabilization loop need be analyzed.

- Static Calibration

- We took a short duration sample of the sensors’ signals while no rider was on the bicycle and the bicycle was fixed as close as possible to vertical in roll before each set of runs. The static accelerometer readings could theoretically give the roll and pitch angles of the bicycle frame and be used to account for the bias in the roll angle measurements.

I only focus on the Balance and Track Straight Line maneuvers with and without disturbances in the following analyses and they will be referred to as Heading Tracking and Lateral Deviation Tracking in the text (as opposed to the labels in the database).

- Heading Tracking

- The rider was instructed to simply balance the bicycle and keep a relatively constant heading while focusing their vision at a point in the far distance.

- Lateral Deviation Tracking

- The rider was instructed to focus on a straight line that was marked on the ground and to attempt to keep the front wheel on the line.

Both tasks were performed with and without the application of a manually applied lateral perturbation force just below the seat. The forces were applied randomly in direction and time.

Data¶

The experimental data was collected on seven different days. The first few days were mostly trials to test the equipment, procedures and different maneuvers. The data from the trial days is valid data and we ended up using it in our analysis. The tires were pumped to 100 psi at the start of each day.

- February 4 2011 Runs 103-109

- These were the first trials on the treadmill for preliminary testing. Only Jason rode. We performed lateral deviation tracking with disturbances. The bike fell over, broke and we had to cut it short.

- February 28, 2011 Run 115-170

- These were the first trials in the pavilion. Jason was the only rider. We tried lane changes (115-139), lateral deviation tracking with disturbances (140-157), and a mixture of heading tracking and lateral deviation tracking with no disturbances (158-170). I noted that the slip clutch backlash seemed to be larger than the previous day with a guess of about 1 degree.

- March 9, 2011 Runs 180-204

- This was the second go at the treadmill, still just testing things. Jason was the only rider. We did heading and lateral deviation tracking with disturbances and some lane changes. The lane changes were 0.25 m wide left and right maneuvers back and forth among two lines on the treadmill at 2 m long segments. Countdown markers to give an idea when the lane change started were necessary due to the rider’s limited preview distance. We did the highest speed during any subsequent trials at 9 m/s. The 9 m/s runs generated a large amount of noise in the lateral force channel. The treadmill elevation was set at 0.1% upwards incline (because it was stuck).

- August 30, 2011 Runs 235-291

- Jason and Luke rode and performed heading and lateral deviation tasks with and without perturbations at three speeds on the treadmill.

- September 6, 2011 Runs 295-318

- Charlie performed heading and lateral deviation tasks with and without perturbations on the treadmill.

- September 9, 2011 Runs 325-536

- Luke, Charlie and Jason performed heading and lateral deviation tracking tasks on the Pavilion floor with and without perturbations. Most of Luke and Charlie’s runs were corrupt due to the time synchronization issues.

- September 21, 2011 Runs 538-706

- Luke and Charlie repeated the runs from September 9th. And we added a couple of blind runs for each of them.

The meta data and raw time history data for each run and all sensor calibration data were stored in individual Matlab mat files on the data acquisition computer using the BicycleDAQ software. The run files and calibration files are automatically numbered in sequence with a five digit number; one sequence for runs and one for calibrations. These mat files were then parsed and merged into a uniform, organized, and complete single HDF5 database that could be accessed by a number of programs and languages for fast data queries. I made use of PyTables for writing and reading from the database. The software BicycleDataProcessor was designed as an interface to the data in the database. In particular, it is able to load the raw data from individual runs, process it, and present it for easy manipulation and viewing.

The database is initially structured with three top-level tables and nodes containing the time histories of the sensors for each run. The run table has a row for each run and the columns store each piece of meta data, including the corruption coding described below. The signal table has a row for each raw and processed signal type and the classification information for each. The calibration table has a row for each calibration which provides information about the sensor and the data collected in the calibration.

We recorded a large set of meta data for each run to help with parsing during analyses. We also video recorded all of the runs (minus a few video mishaps). I coded each run based on the notes, data quality, and viewing the video for potential or definite corrupted data with the following five codes.

- Corrupt

- If the data is completely unusable due to time synchronization issues or others then this is set to true.

- Warning

- Runs with a warning flag are questionable and potentially not usable.

- Knee

- The rider’s knees would sometimes de-clip from the frame during a perturbation. This potentially invalidates the rigid rider assumption. An array of 15 boolean values, one for each perturbation in the run, are stored for each run and each true value in the array represents an individual perturbation where a knee disengaged with the bicycle.

- Handlebar

- On the treadmill the bicycle handlebars occasionally contacted the side railings. Each perturbation during the run in which this happened was recorded.

- Trailer

- On the treadmill the roll trailer occasionally contacted the side of the treadmill. Each perturbation during the run which this happened was recorded.

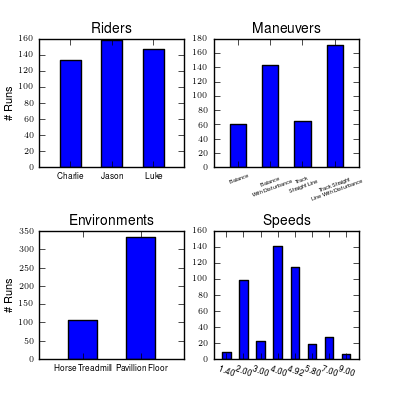

We ultimately collected 600+ runs that were potentially usable for analysis. Figure 11.4 gives a breakdown of the runs by rider, environment, maneuvers, and speed bins.

Four bar charts showing the number of runs that are potentially usable for model identification. These include runs from the treadmill and pavilion, one of the four primary maneuvers, and were not corrupt. Generated by src/systemidentification/data_histograms/py.

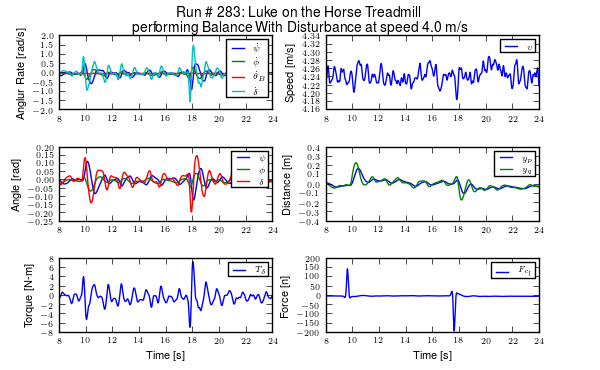

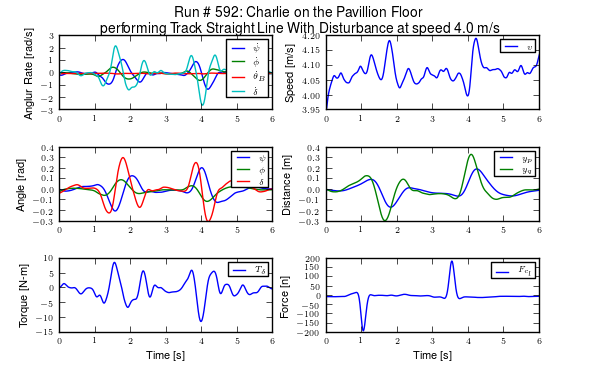

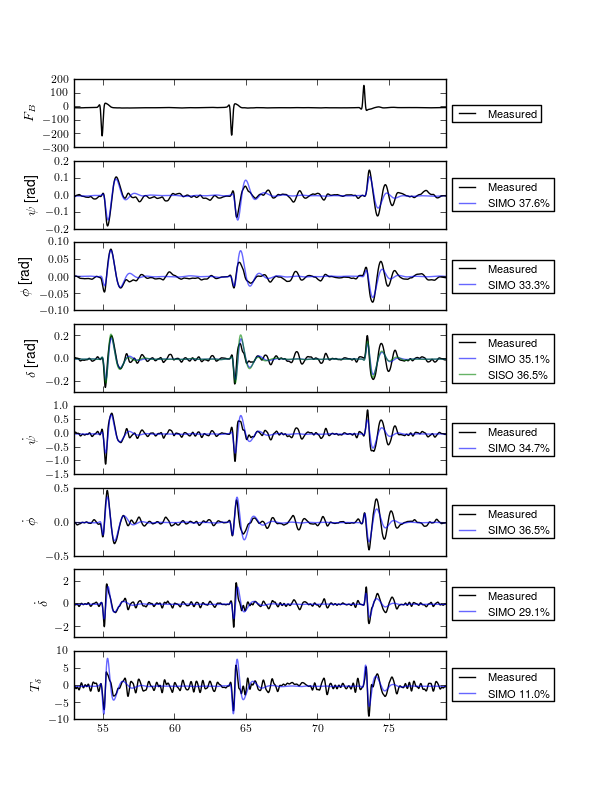

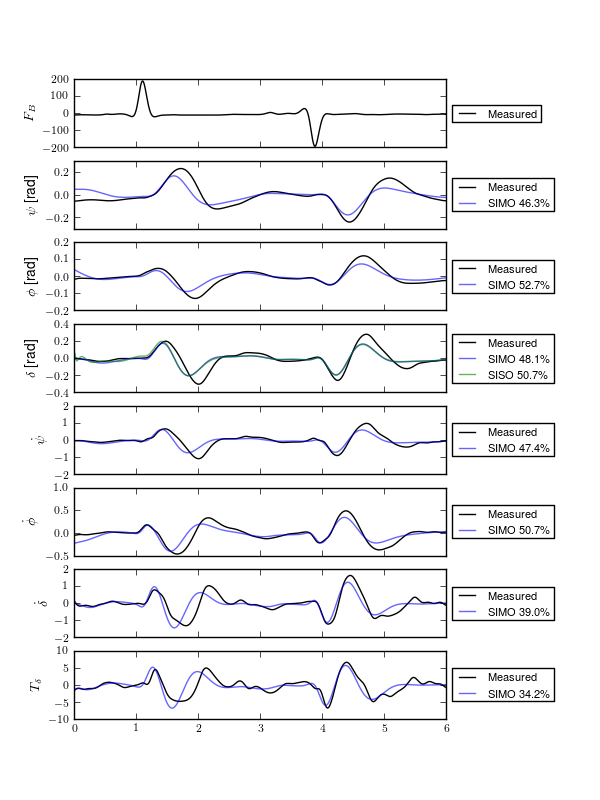

The processed data provides filtered signals that correspond to the coordinates and speeds outlined in our models, Chapters Bicycle Equations Of Motion and Extensions of the Whipple Model. We were even able to estimate the path of the wheel contact points on the ground. The quality of the data is high with little to no missing data and complete descriptions of the dynamic state through time. Figures 11.5 and 11.6 give examples of the processed data for the two environments.

System Identification¶

My primary goal in the following analyses of all the collected data is to identify the manual control system employed the rider. I will approach this in a similar fashion to [Eat73b] and attempt to identify the plant, i.e. the open loop bicycle and rider dynamics, first followed by an identification of the control system. The question arises as to what the plant and controller consist of. In this case, I consider the plant to include the passive or open loop model of the bicycle combined with the rider’s biomechanics and the controller to be the some makeup of the human brain which takes sensory inputs, has time delays, and sends outputs for muscular control.

This two part process was not originally thought to be needed and I started with the identification of the control system assuming the Whipple model would be adequate for the open loop dynamics. But my preliminary attempts at identifying the controller with the Whipple model in place showed that the plant always under-predicted the steer torque needed for a given measured trajectory. This lead me into the exploration of the validity of the Whipple model.

There is actually very little experimental validation of the open loop dynamics of the bicycle, with [Koo06] being one of the better studies. But his study was limited to a riderless bicycle in a narrow speed range where the bicycle was stable. Taking the validity of first principles models like this for granted can potentially lead to inaccurate conclusions. In our case, it resulted in erroneous early estimations of the controller parameters. As pointed out by many, in particular the motorcycle researchers, there is very good reason to question some of assumptions. The main questionable assumption being knife-edge no side-slip wheels, especially when under a rider’s weight. And secondly, the rider’s biomechanics have much more influence and coupling to the bicycle than the motorcycle, which must be accounted for.

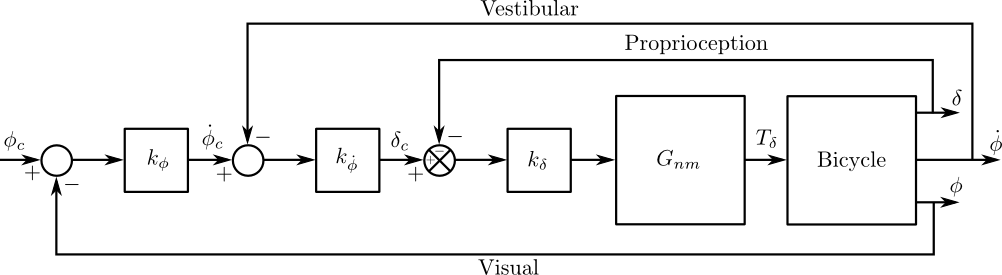

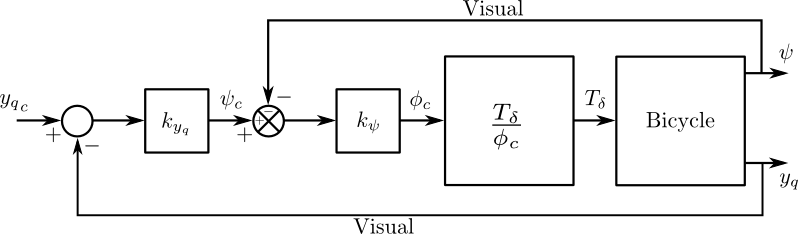

After a model for the open loop system is derived I identify parameters in the control structure described in [HMH12] and in Chapter Control. We’ve shown that this control structure is robust for a range of speeds and lends itself to the dictates of the crossover model which is built upon strong experimental evidence in human operator modeling. I make use of multi-input multi-output grey box state space identification techniques to arrive at the optimal parameters for the measured data.

Before I proceed, it is important to emphasize the difference in identifying a model that best predicts the data and identifying physical parameters in a model structure that cause the predictions to best fit the measured data. In the first case, it is somewhat easy to fit a model to input and output data. By increasing the order of the model and thus the number of free parameters one can theoretically fit every data point. This is most evident in the over-fitting of a linear trend with that of a higher order polynomial. It still often takes human intuition and reasoning to limit the order of the system to something that represents the true relationships between the variables. But even in this case, the individual meaning of the resulting identified parameters of a black box system may have little apparent connection to the known first principles laws we are familiar with and trust. In dynamics, we often want to know how well our first principles models predict the measured motion and secondly we’d like the ability to identify parameters, particularly ones we are uncertain of in the first principles models, from the measured data. Accurately identifying model parameters is much more difficult task, because noises, both process and measurement, have to be accounted for to get repeatable and accurate estimates of the parameters. I have had good success with finding models that predict the data but little success with explicit and accurate parameter identification in the following analyses. There is great room for improvement in the parameter identification if the noise issues are better managed.

Bicycle Model Validity¶

The open loop dynamics of the bicycle-rider system can be described using many models, see [ASKL05], [LS06], and [MPRS07] for good overviews. The benchmarked Whipple model [MPRS07] provides a somewhat minimalistic model in a manageable analytic framework which is capable of describing the essential dynamics such as speed dependent stability, steer and roll coupling, and non-minimum phase behavior. I use this model as the standard base model to work with, as the fidelity of simpler models are generally not adequate. The model is 4th order with roll angle, steer angle, roll rate and steer rate typically selected as the independent states and with roll and steer torque as inputs. I neglect the roll torque input and in its place extend the model to include a lateral force acting at a point on the frame to provide a new input, accurately modelling imposed lateral perturbations (see Chapter Extensions of the Whipple Model for the details). I also examine a second candidate model which adds inertial effects of the rider’s arms to the Whipple model, also in Chapter Extensions of the Whipple Model. This model was designed to more accurately account for the fact that the riders were free to move their arms with the front frame of the bicycle. This model is similar in fashion to the upright rider in [SK10a], but with slightly different joint definitions. Constraints are chosen so that no additional degrees of freedom are added, keeping the system both tractable and comparable to the benchmarked Whipple model.

I make the assumptions that the model is (1) linear and (2) has two degrees of freedom. The best model for a given set of data is constrained by those two assumptions. The implications of this is that even if the model predicts the outputs from the measured inputs it may not reflect realistic parameter values in a first principles sense because all real systems have infinite order. Secondly, the identified model may not map to the assumption we make in first principles derivations about things such as joints, friction, inertia, etc. There may exist higher order models which both fit the output data well and better map parameter values to first principle constructs. For example, a bicycle model with side slip at each wheel will be sixth order and if the regression to find the best model has extra degrees of freedom in the two additional equations, the optimal solution may be such that the numerical values of the equation coefficients map more closely to the first principle parameters. For example, it may be possible to make a fourth order model behave similarly to a sixth order model with “correct” first principles parameters by choosing unrealistic parameter values. But if the primary goal is the control identification, rather than understanding the quality of our first principles derivations, the model of lowest order that still fits the data well is completely suited for the task.

I estimated the physical parameters of the first principles models with the techniques described in Chapter Physical Parameters. The bicycle was measured to get accurate estimates of the parameters used in the benchmark bicycle. Each rider’s inertial properties were estimated using Yeadon’s [Yea90b] method which allowed easy extraction of body segment parameters for more complicated rider biomechanic models such as the inclusion of moving arms as described above. The parameter computation is handled with two custom open source software packages [Dem11] and [MKSH11].

State Space Realization¶

During all of the experiments there are two measured external (or exogenous) inputs: the steer torque and the lateral force. Both inputs are generated manually, the first from the rider and the second from the person applying the pulsive perturbation. The outputs can be any subset of the measured kinematical variables or combinations thereof. The problem can then be formulated as follows: given the inputs and outputs of the system and some system structure, what model parameters give the best prediction of the output given the measured input. This a classic system identification problem.

Method¶

For this analysis, I limit the inputs to steer torque and lateral force and the outputs to roll angle, steer angle, roll rate, and steer rate. The ideal fourth order system can be described with the following continuous state space description

where \(\eta(t)\) are the outputs and \(\mathbf{H}\) is the identity matrix.

Assuming that this model structure can adequately capture the dynamics of interest of the bicycle-rider system, our goal is to accurately identify the unknown parameters \(\theta\) which are made up of the unspecified entries in the \(\mathbf{F}\) and \(\mathbf{G}\) matrices. To do this one needs to recognize that this continuous formulation is not compatible with noisy discrete data. The following difference equation can be assumed if we sample the continuous system at \(t=kT\), \(k=1,2,\dots\), with \(T\) being the sample period and making the assumption that the variables are constant over the sample period (i.e. zero order hold).

The additional terms \(w\) and \(v\) represent the process and measurement noise vectors, respectively, which are assumed to be sequences of white Gaussian noise with zero mean and some covariance. By making use of the Kalman filter, Equation (2) can be transformed such that the optimal estimate of the states, \(\hat{x}\), with respect to the process and measurement noise covariance are utilized, see [Lju98].

where \(\mathbf{K}\) is the Kalman gain matrix. \(\mathbf{K}\) is a function of \(\mathbf{A}(\theta)\), \(\mathbf{C}(\theta)\) and the covariance and cross covariance of the process and measurement noises, but it can also be directly parameterized by \(\theta\). With that, this equation is called the directly parameterized innovations form [Lju98] and the entries of the four matrices in equation (3) can be estimated directly.

The \(\mathbf{A}\) and \(\mathbf{B}\) matrices are related to \(\mathbf{F}\) and \(\mathbf{G}\) by

where \(T\) is the sampling period. With a linear assumption \(\mathbf{A}\) and \(\mathbf{B}\) can even be directly estimated in discrete form by

The one step ahead predictor for the innovations form is

where \(q\) is the forward shift operator (\(q u(t) = u(t+1)\)) [Lju98]. The predictor is a vector of length \(p\) where each entry is a ratio of polynomials in \(q\). These are transfer functions in \(q\) from the previous inputs and outputs to the current output. In general, the coefficients of \(q\) are non-linear functions of the parameters \(\theta\).

We can now construct the cost function, which will enable the computation of the parameters which give the best fit using optimization methods. We’d like to minimize the error in the predicted output with respect to the measured output at each time step. First form \(Y_N\) which is a \(pN \times 1\) vector containing all of the current outputs at time \(kT\).

where \(p\) is the number of outputs and \(N\) is the number of samples. Then organize the predictor vector, \(\hat{Y}_N(\theta)\), the one step ahead prediction of \(Y_N\) given \(y(s)\) and \(u(s)\) where \(s \leq t - 1\)

The cost function is then the norm of the difference of \(Y_N\) and \(\hat{Y}_N(\theta)\) for all \(k\).

The value of \(\theta\) which minimizes the cost function is the best prediction

where \(Z^N\) is the set of all the measured inputs and outputs.

In general, the minimization problem is not trivial and may be susceptible to many of the issues associated with optimization including local minima. The number of unknown parameters in the \(\mathbf{K}\) matrix is a function of the number of states and the number of outputs, in our case in \(\mathbf{R}^{4\times4}\) which more than doubles the number of unknowns present in the \(\mathbf{A}\) and \(\mathbf{B}\) matrices. It is thus critical to reduce the number of unknown parameters to have a higher chance of finding the global minimum of the cost function. The accuracy of the system parameters depends on the ability to estimate the \(\mathbf{K}\) matrix along with the other parameters.

Before identification I further processed all of the signals that were generally symmetric about zero by subtracting the means over time. For some of the pavilion runs, this may have actually introduced a small bias, as the short duration runs with unbalanced perturbations may not have zero mean.

I made use of the Matlab System Identification Toolbox for the identification of the parameters \(\theta\) in each run of this model structure. In particular, a structured idss object was built with the initial guesses of the unknown parameters based on the Whipple model and the initial guesses for the initial conditions and the Kalman gain matrix being equal to zero. All of my attempts at identifying the Kalman gain matrix were plagued by local minima.

Results¶

It turns out that finding a model that meets the criterion is not too difficult when the output error form is considered (\(\mathbf{K}=0\)). This model may be able to explain the data well, but the parameter estimation is potentially poor because the parameters in the state and input matrices are adjusted such that the results fit both the true trajectories and the noise. Global minima in the search routine are quickly found when the number of parameters is between 10 and 14. When the \(\mathbf{K}\) matrix is added the number of unknown parameters increases by 16 and the global minimum becomes more difficult to find and I was rarely able, if ever, to find the global minimum for the general problem, even when reducing the number of outputs to one.

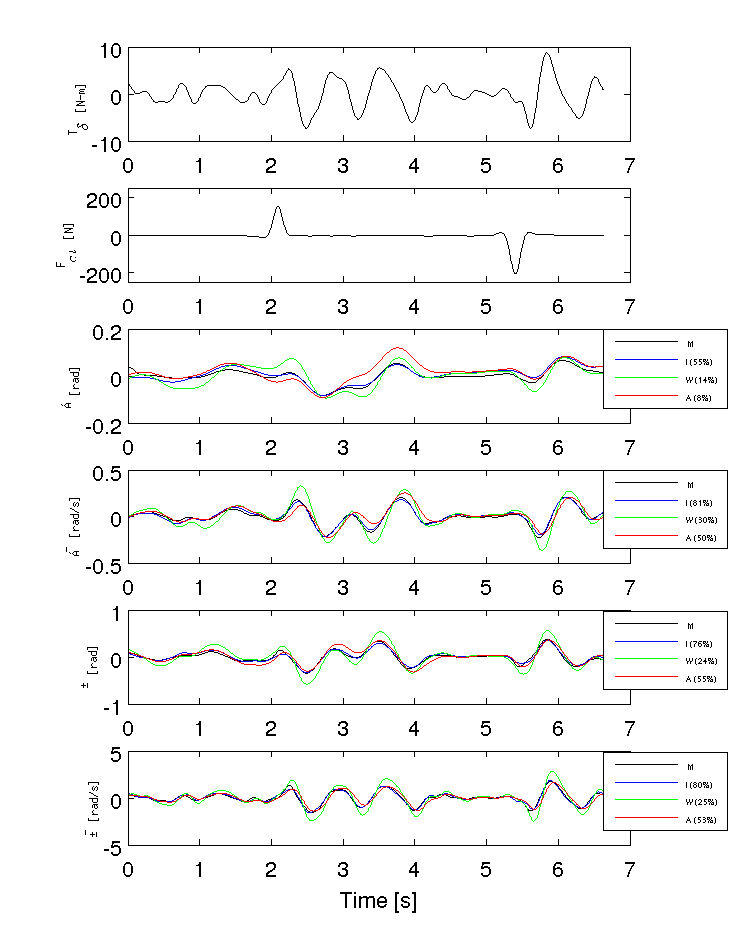

Figure 11.7 shows typical example input and output data for a single run (#596) with both steer torque and lateral force as inputs. The plot compares the simulation response of the input to the measured response. Notice that the identified model predicts the trajectory extremely well. Similar results are found for the majority of the runs. The Whipple model predicts the trajectory directions but the magnitudes are large, meaning that for a given trajectory, the Whipple model requires less torque than that measured. The Whipple model with the arm inertial effects does a better job than the basic Whipple model, but still has some magnitude differences with respect to the identified model. It also has a harder time predicting the roll angle than both the identified model and the Whipple model.

Example results for the identification of a single run (#596). The experimentally measured steer torque and lateral force are shown in the top two graphs. All of the signals were filtered with a 2nd order 15 hertz low pass Butterworth filter. The remaining four graphs show the simulation results for the Whipple model (W), Whipple model with the arm inertia (A), and the identified model for that run (I) plotted with the measured data (M). The percentages give the percent of variance explained by each model.

The identified models are almost always unstable due to the high weave critical speed and, even though the measured inputs stabilize the true system, they will not necessarily stabilize the models. This poses an issue when gauging the model quality by the percentage variance of the output data explained by the model. A model that blows up during the simulation may not necessarily be a bad model, but it will return a very small percent variance and lose its ability to be compared by that criterion. [BBCL03] and [TJ10] both are able to run feed-forward simulations of their motorcycle models with the measured steering torque. They both are dealing with high speed motorcycles which typically only have a slightly unstable capsize mode. [TJ10] uses a controller to compensate the torque for unbounded errors so that the simulation doesn’t blow up. The method I use here is to choose short duration portions of the runs for simulation and search for the best set of initial conditions to keep the model stable during the duration. This generally works but there are ultimately some incomparable runs due to this issue.

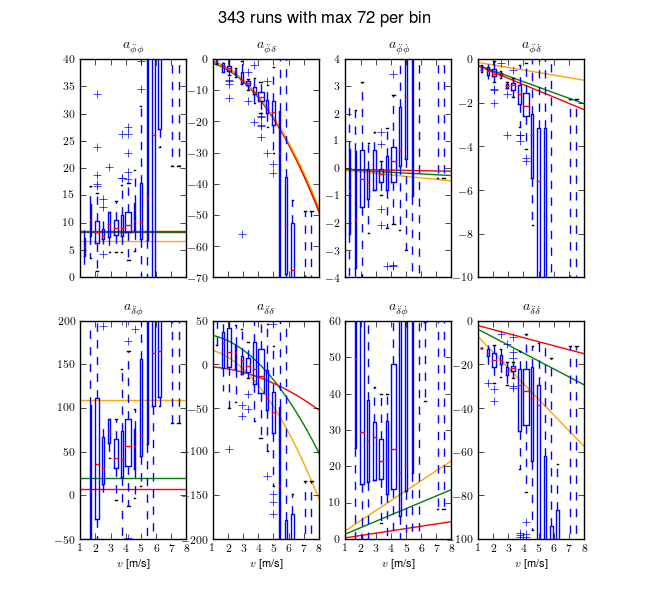

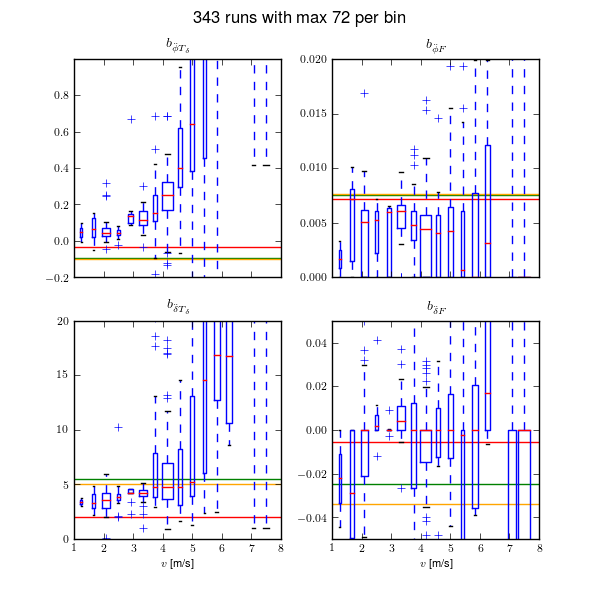

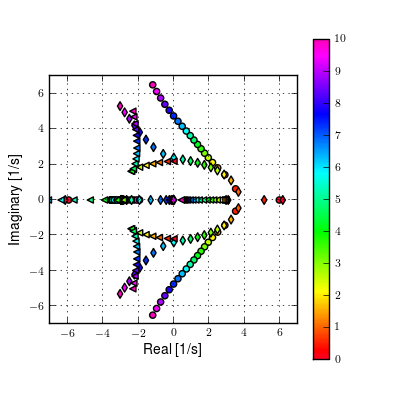

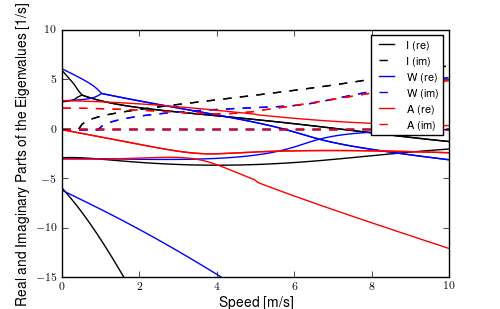

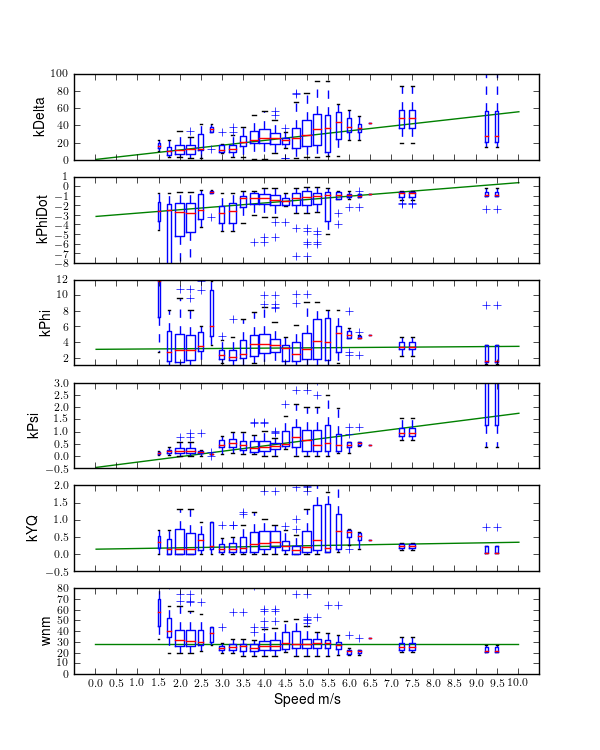

I use this structured state space output error identification procedure for a collection of experiments (\(n=368\)) over a range of speeds between about 1 and 9 m/s. Figures 11.8 and 11.9 plot the identified coefficients of the dynamical equations of motion (i.e. the bottom two rows of the \(\mathbf{F}\) and \(\mathbf{G}\) matrices) as a function of speed for all of the experiments using box plots. Both the Whipple (green) and arm (red) model predictions are superimposed for comparison. The first notable thing is that the coefficients seem to generally have large variance, especially as the speed increases. Secondly, the roll acceleration, \(\ddot{\phi}\), equation seems to be better predicted by the two models and the data has less spread at the lower speeds, barring the \(\dot{\phi}\) coefficient which has large spread and no apparent relationship with speed for both equations. The roll equation also seems to have less spread in the experimental data. For example, the \(a_{\ddot{\phi}\delta}\) coefficient appears to be very tight and the first principles models predict it very well. The constant, linear, and quadratic trends in the coefficients are somewhat visible in the data but the variance in the coefficients clouds it. This variability in the coefficient predictions depends on many things including data quality, the ability to identify a process noise model, speed being constant during the run, choice of unknown coefficients, and more. With all of these improved, detailed regression models may be able to reveal the true trends[4]. Nonetheless, these graphs reveal several important things:

- The identified models predict the data well with most having mean predicted variance of the four outputs above 70% (but this is tightly coupled to run duration, i.e. longer runs have more room for model error).

- Some of the coefficients are well predicted by the Whipple model and can be fixed from first principles calculations, notably: \(a_{\ddot{\phi}\phi}\), \(a_{\ddot{\phi}\delta}\) and \(b_{\ddot{\delta}T_\delta}\) and maybe even \(a_{\ddot{\delta}\delta}\).

- The roll rate coefficients are highly variable with poor prediction by the models. Deficiencies in the first principles models are likely.

- Either the higher speed runs are outliers, or the behavior of the system changes more rapidly with speeds above 5 m/s or so.

- Some coefficients spread around zero giving inconsistent signs and others give opposite signs from those the first principles models expect.

State coefficients of the linear dynamical equations of motion plotted as a function of speed. Each box plot represents the distribution of that parameter for a small range of speeds, i.e. speed bin. The width of the box is proportional to the total duration of the runs in that speed bin. The green line is the Whipple model and the red line is the arm model. Only experiments with a mean fit percentage greater than zero are shown. The orange line is the model identified with the canonical method using runs done by Luke in the pavilion which is presented and discussed in the next section. Generated by src/systemidentification/coefficient_box_plot.py.

Input coefficients of the linear dynamical equations of motion plotted as a function of speed. Each box plot represents the distribution of that parameter for a small range of speeds, i.e. speed bin. The width of the box is proportional to the total duration of the runs in that speed bin. The green line is the Whipple model and the red line is the arm model. Only experiments with a mean fit percentage greater than zero are shown. The orange line is the model identified with the canonical method using runs done by Luke in the pavilion which is presented and discussed in the next section. Generated by src/systemidentification/coefficient_box_plot.py.

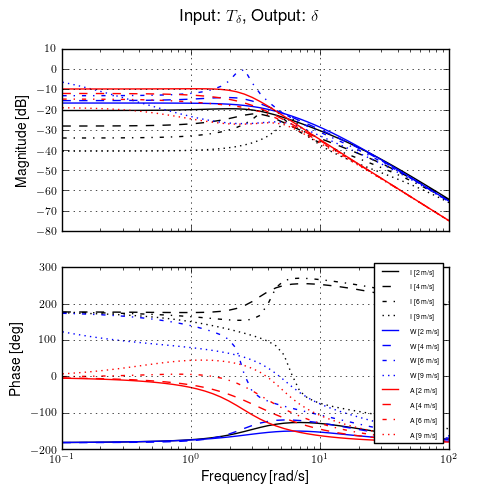

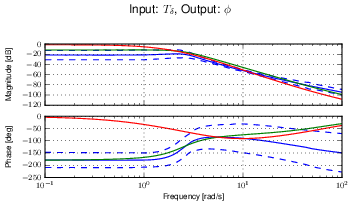

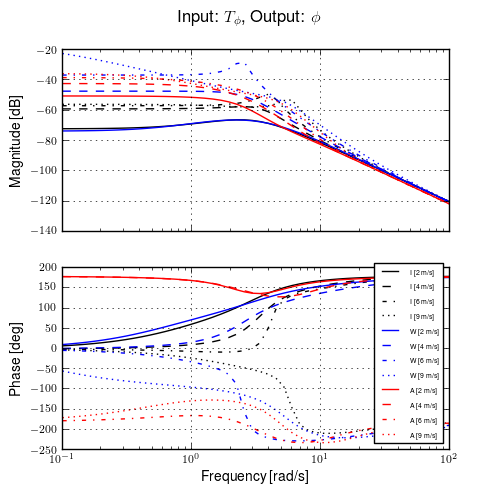

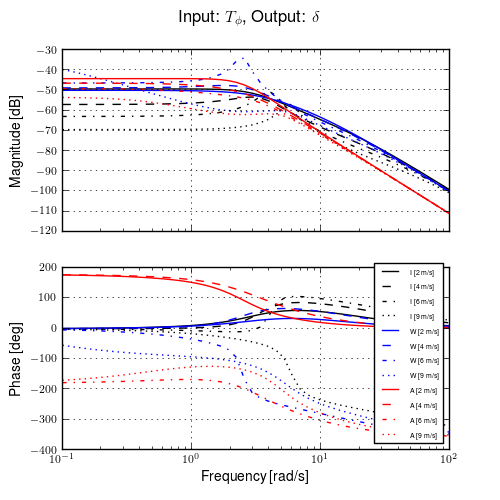

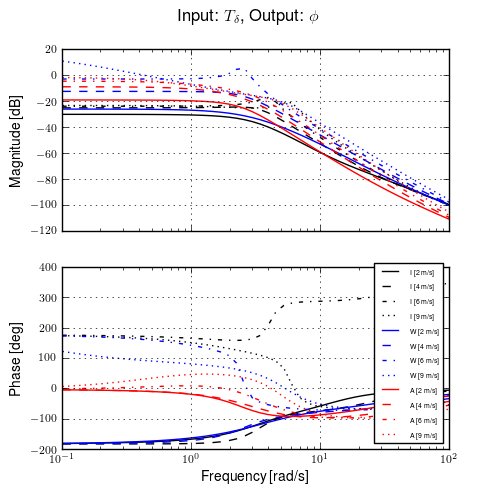

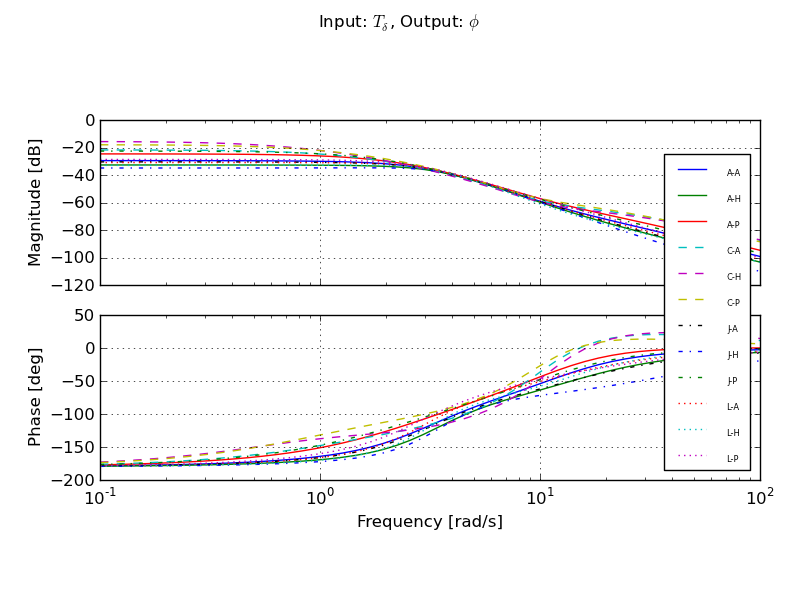

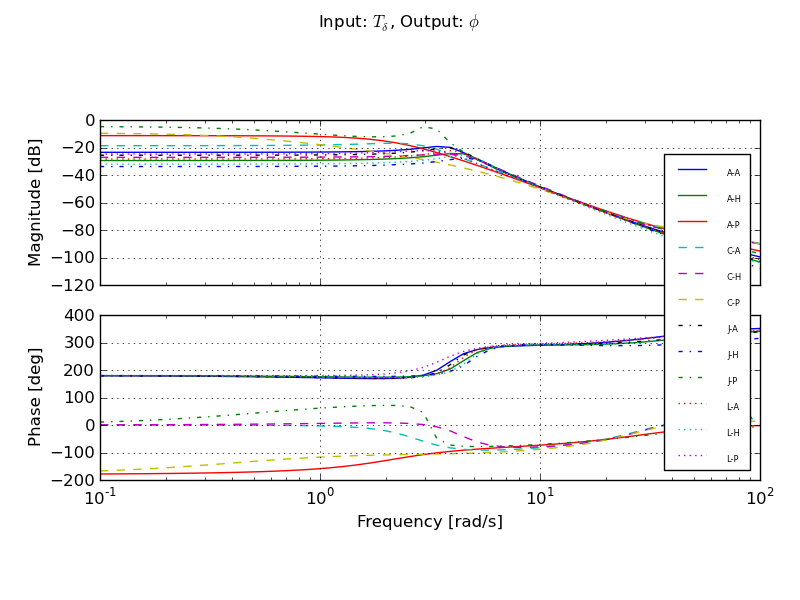

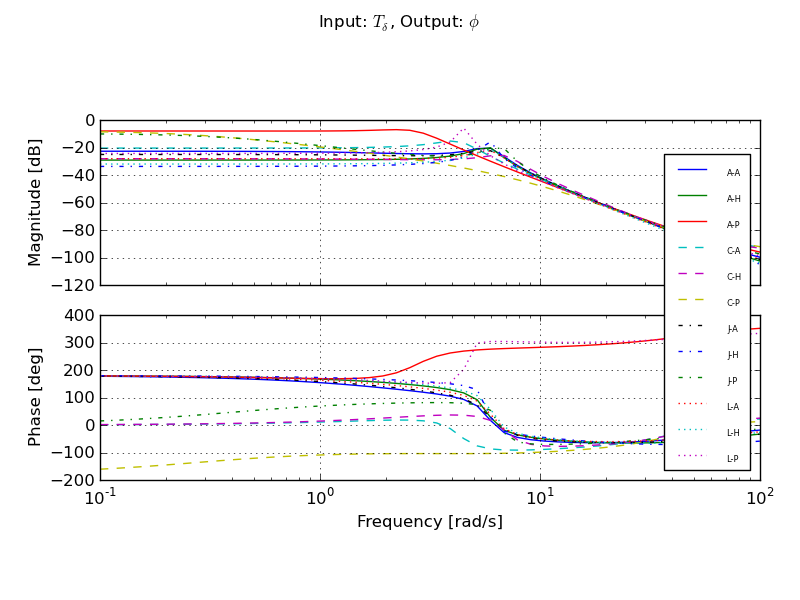

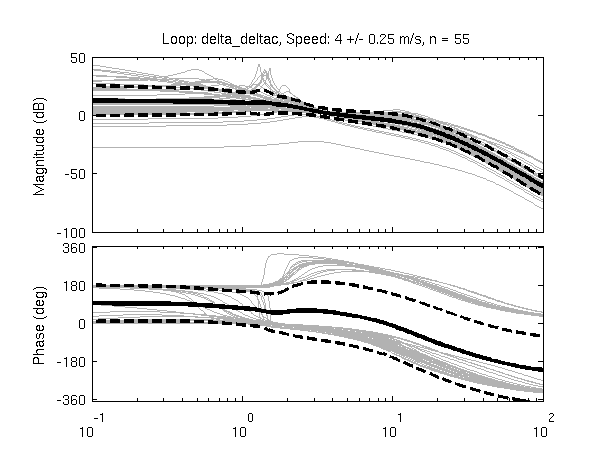

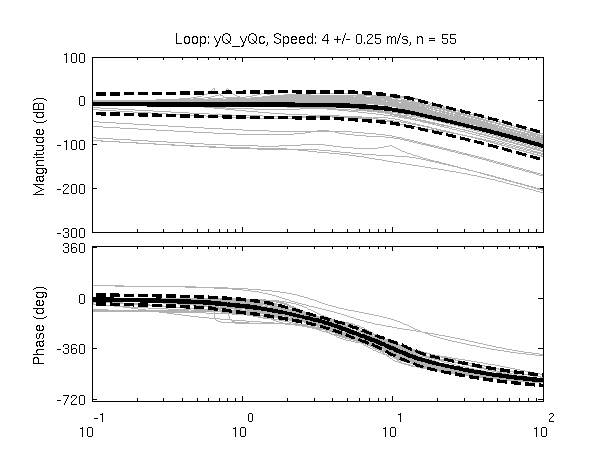

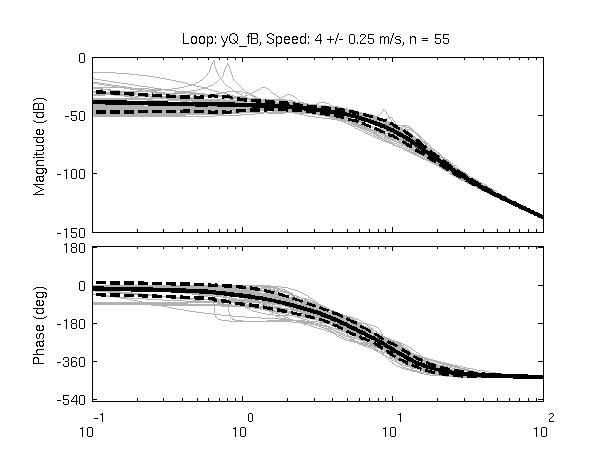

Figure 11.10 gives another view of the resulting data. It is a frequency response plot at the mean speed for a set of runs. The blue lines give the mean and one standard deviation bounds of the magnitude and phase of the system transfer function \(\frac{\phi}{T_\delta}(s)\) for the set of runs. Even though the spread in the identified parameters seems high in Figures 11.8 and 11.9, the Bode plot shows that the identified system frequency response is not as variable, especially in magnitude. It is also apparent that the experimental magnitude mean has a -5 to -10 dB offset across the frequency range shown with respect to the Whipple model, although the Whipple model does fall within one standard deviation of the mean. This correlates with the amplitude differences in the trajectories shown in Figure 11.7. Notice that the arm model has little to no offset between 2 and 10 rad/s, thus the better amplitude matching. The frequency response may give a better indication of the overall identified model quality.

Frequency response of steer torque to roll angle for a set of runs at \(4.0 \pm 0.3\) m/s. The solid blue line is the mean from the identified runs and is bounded by the standard deviation, the dotted blue lines. The green line is the Whipple model and the red line is for the model which accounted for the arm inertial effects.

Conclusion¶

I have shown that a fourth order structured state space model is both adequate for describing the motion of the bicycle under manual control in a speed range from approximately 1.5 m/s to 9 m/s. The fact that higher order models may not be necessary for bicycle dynamic description is an important finding. More robust models of single track vehicles are typically higher than 4th order, with degrees of freedom associated with tire slip, frame flexibilities, and rider biomechanics. These findings suggest that the more complex models may be overkill for many modeling purposes. The data subsequently also reveals that fourth order archetypal first principles models are not robust enough to fully describe the dynamics. The deficiencies are most likely due to un-modeled effects, with the knife-edge, no side-slip wheel contact assumptions being the most probable candidate. Un-modeled rider biomechanics such as passive arm stiffness and damping and head motion may play a role too. The uncertainty in the estimates of the physical parameters from Chapter Physical Parameters is not large enough to explain the difference between the identified coefficient identification and their predictions from first principles. It is likely that something as simple as a “static” tire scrub torque is needed to improve the fidelity of the first principles derivations, but that doesn’t preclude that the additional of a tire slip model might also improve the models.

Canonical Identification¶

One issue I faced with the state space realization was dealing with multiple experiments. Ideally I had hoped to identify a linear model that was a function of speed with respect to all or various subsets of the experiments. It is possible to concatenate runs, but discontinuities in the data potentially disrupt the identification. There is also the possibility of designing a cost function that gives the error in all the outputs across all of the runs simultaneously instead of on a per run basis. Both my recently obtained knowledge in system identification and the constraints of the methods available in the Matlab System Identification toolbox were limiting factors in these two approaches. But, Karl Åström suggested doing the system identification with respect to the second order form of the equations of motion. This would allow one to use both simple least squares for the solution and the ability to compute models from large sets of runs. This section deals with this approach.

Model structure¶

The identification of the linear dynamics of the bicycle can be formulated with respect to the benchmark canonical form realized in [MPRS07], Equation (11). If the time varying quantities in the equations are all known at each time step, the coefficients of the linear equations can be estimated given enough time steps.

where the time-varying states roll and steer are collected in the vector \(q = [\phi \quad \delta]^T\) and the time varying inputs roll torque and steer torque are collected in the vector \(T = [T_\phi \quad T_\delta]^T\). This equation assumes that the velocity is constant with respect to time as the model was linearized about a constant velocity equilibrium, but the velocity can also potentially be treated as a time varying parameter if the acceleration is negligible. I extend the equations to properly account for the lateral perturbation force, \(F\), which was the actual input we delivered during the experiments. It contributes to both the roll torque and steer torque equations.

where \(H = [H_{\phi F} \quad H_{\delta F}]^T\) is a vector describing the linear contribution of the lateral force to the roll and steer torque equations. \(H_{\phi F}\) is approximately the distance from the ground to the force application point. \(H_{\delta F}\) is a distance that is a function of the bicycle geometry (trail, wheelbase) and the longitudinal location of the force application point. For our normal geometry bicycles, including the one used in the experiments, \(H_{\delta F} << H_{\phi F}\). I estimate \(H\) for each rider/bicycle from geometrical measurements and the state space form of the linear equations of motion calculated in Chapter Extensions of the Whipple Model.

where \(x = [\phi \quad \delta \quad \dot{\phi} \quad \dot{\delta}]^T\) and \(u = [F \quad T_\phi \quad T_\delta]^T\). The state and input matrices can be partitioned.

where \(\mathbf{A}_l\) and \(\mathbf{A}_r\) are the 2 x 2 sub-matrices corresponding to the states and their derivatives, respectively. \(\mathbf{B}_F\) and \(\mathbf{B}_T\) are the 2 x 1 and 2 x 2 sub-matrices corresponding to the lateral force and the torques, respectively. The benchmark canonical form can now be written as

where

| Rider | \(H\) |

| Charlie | \([0.902 \quad 0.011]^T\) m |

| Jason | \([0.943 \quad 0.011]^T\) m |

| Luke | \([0.902 \quad 0.011]^T\) m |

The location of the lateral force application point is the same for Charlie and Luke because they used the same seat height. The force was applied just below the seat, which was adjustable in height for different riders.

Data processing¶

Chapter Davis Instrumented Bicycle details how each of the signals were measured and processed. For the following analysis, all of the signals were filtered with a second order low pass Butterworth filter at 15 Hz. The roll and steer accelerations were computed by numerically differentiating the roll and steer rate signals with a central differencing method except for the end points being handled by forward and backward differencing. The mean was subtracted from all the signals except the lateral force.

Identification¶

A simple analytic identification problem can be formulated from the canonical form. If we have good measurements of \(q\), their first and second derivatives, forward speed \(v\), and the inputs \(T_\delta\) and \(F\), the entries in \(\mathbf{M}\), \(\mathbf{C}_1\), \(\mathbf{K}_0\), \(\mathbf{K}_2\), and \(H\) can be identified by forming two simple regressions, i.e. one for each equation in the canonical form. I use the instantaneous speed at each time step rather than the mean over a run to improve accuracy with respect to the speed parameter as it has some variability.

The roll and steer equation each can be put into a simple linear form

where \(\Theta\) is a vector of the unknown coefficients and \(\mathbf{\Gamma}\) and \(Y\) are made up of the inputs and outputs measured during a run. \(\Theta\) can be all or a subset of the entries in the canonical matrices. If there are \(N\) samples in a run and we desire to find \(M\) entries in the equation, then \(\mathbf{\Gamma}\) is an \(N \times M\) matrix and \(Y\) is an \(N \times 1\) vector. The Moore-Penrose pseudo inverse can be employed to solve for \(\Theta\) analytically. The estimate of the unknown parameters is then

For example, if we fix the mass terms in the steer torque equation and let the rest be free the linear equation is

The error in the fit is